In our projects, we had a simple script named do.sh to quickly define and run tasks. I don’t remember why we didn’t use a Makefile, but the script had similar functionality. It’s just a Bash script: you can define environment variables, add functions, and easily run other CLI commands. We wanted to do that without an extra dependency, so we could tell the developers, “Just download the script and run the command sh do.sh setup.” That’s it! It was much better than writing all the steps and other instructions in the README.md file.
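
For readers who have never used this pattern, here is a minimal sketch of what such a do.sh can look like. It is not the original script: the setup and test tasks and the APP_ENV variable are hypothetical placeholders. It sticks to POSIX sh, so sh do.sh setup also works on systems where sh is dash rather than Bash.

```shell
#!/bin/sh
# do.sh: a minimal task-runner sketch (hypothetical tasks, not the original script).
# Usage: sh do.sh <task>
set -eu

# Shared environment variables for every task (APP_ENV is a placeholder).
export APP_ENV="${APP_ENV:-development}"

usage() {
  echo "usage: sh do.sh {setup|test}"
}

setup() {
  echo "Setting up ($APP_ENV)..."
  # A real task would run other CLI commands here, e.g. creating a virtualenv.
}

run_tests() {
  echo "Running tests..."
}

# Dispatch on the first argument; with no argument, print the usage.
case "${1:-help}" in
  setup) setup ;;
  test) run_tests ;;
  help) usage ;;
  *) usage >&2; exit 1 ;;
esac
```

Adding a task means adding one function and one case branch, which is why this kind of script stays easy to maintain.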

One day, however, I decided to switch from Linux to Windows and start using PowerShell. This meant that I could no longer easily run all these Bash scripts. I also didn’t want to spend extra effort converting them into PowerShell (.ps1) scripts, so I created a new Python project called DOSH. The idea: download a single program called dosh, write a single task script in Lua, and have it work on every operating system and shell. (Oh yeah, maybe that’s why I didn’t want to use a Makefile: Make isn’t really a cross-platform tool.) But a few new problems emerged with DOSH:

The zero-dependency rule. When it was a Bash script, you didn’t need to install anything extra to run it on macOS and Linux (or even on Windows with WSL). I’m fine with downloading one program, but it must be very easy to install. How can we achieve that with a Python application?

The need for continuous development. The needs never end. As with yt-dlp, where contributors keep adding support for the sites they need, the project only becomes meaningful when people write functions for their own specific purposes. For example, I use TinyPNG to reduce the size of images on my blog. Instead of doing that by hand, I would like to run a single command like dosh compress-images, or have it run automatically on each deployment (dosh deploy-blog).

Let’s set the second point aside and return to our topic. How can we ensure the zero-dependency rule in a Python project? I recommend you take a quick look at the headings before you start reading.

The Purpose

I want to make an application that is easy to install and doesn’t require extra instructions to use. In other words, users shouldn’t have to go to python.org and download Python to run the application. It should be self-executable. The application can be a single file, which is easier to download and install, or it can consist of multiple files and directories inside an archive. Both methods have advantages and disadvantages. For example, a single-file application will probably be our first choice, but every time we run the command, it may lose time unpacking its contents before the program even starts. For a desktop application, this delay might be negligible, but for a CLI application, waiting at startup, even for milliseconds, becomes annoying at some point.

There’s another concept: self-contained. As the name suggests, the package holds not just the interpreter but also the dependencies; it contains everything needed not only to start the program but to run all of its features. The difference is subtle but clear. To repeat: we need a Python interpreter to run a Python application, but the application also has dependencies. For example, if you use Qt for the interface and haven’t installed Qt and its Python bindings on your computer, the application won’t run because of the missing dependencies.

“Well, if you’re embedding the interpreter, why wouldn’t you include the dependencies too?” you might ask. In Python, we don’t really use or need the concept of a shared library; that need arises more in languages like C++, C#, and Java. You might have encountered this: you install a game from Steam, and when you try to run it, a popup says “Please install Microsoft Visual C++ 2015 Runtime” blah blah, and the game closes. That is a shared library issue. Instead of embedding the runtime into thousands of games one by one, you install it once, and all the games use the same library. When many programs share common components like this, shared libraries make sense because they reduce the download cost per game.

Now, let’s decide on the method.

Which Method Should I Choose?

Let’s review the options we have. We’ll evaluate all options specifically for Python:

Using the Operating Systems’ Package Managers

WinGet on Windows, Homebrew on macOS, and on Linux… a .deb package for Ubuntu, an .rpm for Fedora, a PKGBUILD in the AUR for Arch Linux, or Flatpak or Snap for all distros… There are too many package managers. Is it worth spending this much effort on a single application? It would be reasonable if we only needed to support macOS. Alternatively, if you have a very strong community, everyone can contribute and make the app available through multiple package managers. However, the community would then be busy updating, maintaining, and automating those packages. It also slightly bends the zero-dependency rule I mentioned at the beginning. Say you prepared a Homebrew package for macOS; what if the user doesn’t use Homebrew? Some users may prefer MacPorts. Should you create a package for that too? If you did, would it be easy to get it accepted into the repository? How long would it take?

In short, there might be special cases where it’s reasonable, but not for DOSH. This method is an example of self-executability.

Using Python Package Managers

Some applications may require a Python interpreter to be installed on the system. For example, say you’re a research engineer who uses Anaconda. If my application is intended for Anaconda users, it might make sense to create a conda package. If I need to support other Python distributions, publishing to PyPI might be more logical.

Since I designed DOSH as a language-independent application, this method didn’t work for me. However, there is one exception: Astral’s uv. uv has almost every feature that other Python package and dependency managers have. More to the point, users can try your application without installing a Python interpreter, or even without installing your application beforehand. You only need to tell your users two steps:

- Install uv.

- Run this command:

```shell
uvx <your-application-command>
```

It’s quite simple; the only requirement is that your application is published on PyPI.

This method is neither self-executable nor self-contained: the user either installs an interpreter and lets its package manager install the dependencies, or installs uv, which takes care of both.

Zipapp

Frankly, I expect a self-contained application to also be self-executable, but zipapp falls a bit outside that expectation. You still need an installed interpreter, but you don’t need to install any dependencies, because zipapp lets you create an archive that bundles them. (This works for pure-Python dependencies; compiled extension modules can’t be imported directly from a zip archive.)
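
As a rough sketch of that workflow, the following shell function copies an application’s source into a temporary directory, vendors its dependencies there with pip install --target, and packs everything with the standard library’s zipapp module. The function name build_pyz, the package name myapp, its entry point myapp.cli:main, and the requirements.txt location are assumptions for illustration.

```shell
#!/bin/sh
# Bundle an application and its (pure-Python) dependencies into one .pyz file.
# Assumes python3 with pip on PATH; build_pyz and the source layout are hypothetical.
set -eu

build_pyz() {
  src_dir="$1"     # directory containing the application package
  out_file="$2"    # e.g. myapp.pyz
  entry_point="$3" # e.g. myapp.cli:main
  build_dir="$(mktemp -d)"

  cp -R "$src_dir"/. "$build_dir"/

  # Vendor the dependencies next to the code so no install step is needed later.
  if [ -f "$src_dir/requirements.txt" ]; then
    python3 -m pip install --quiet --target "$build_dir" -r "$src_dir/requirements.txt"
  fi

  # -m generates a __main__.py that calls the entry point; -p adds a shebang line.
  python3 -m zipapp "$build_dir" -o "$out_file" -m "$entry_point" -p "/usr/bin/env python3"
  rm -rf "$build_dir"
}
```

Users then run python3 myapp.pyz, or ./myapp.pyz on Unix-like systems, where the shebang applies. Only the interpreter has to be installed.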

When is this method useful? The first things that come to mind:

- You have an internal company tool that you can’t publish on PyPI, and you don’t want to deal with private registries like AWS CodeArtifact.

- For a simple project, you want to package and deploy the application without dealing with Docker containerization.

- You want to use it as part of a code obfuscation process for an on-premises service. (I’m not sure how useful that is; it needs more investigation.)

In short, this method also has reasonable use cases, but not for DOSH.

PyInstaller, Nuitka, PyApp, and Others

As a final option, let’s review the projects built for producing zero-dependency, ready-to-use packages. Why didn’t we look at these from the start? Because they are not as easy as they seem: they require extra effort and maintenance, and there’s no silver bullet. Like the other options, none of them solves the problem completely. My advice is to solve the problem with the simpler options whenever possible, and to reach for tools like PyInstaller only when those don’t work.

Each of these tools has its own approach and priorities. Depending on the tool, you can produce a single file or a single directory. You can make the package only self-executable and have the dependencies installed later, or turn it into an installation package that works completely offline. Some tools add the command to the executable path (PATH) automatically, making it directly accessible; with others, you may need to move the file or directory to the right place yourself. Some can even handle code obfuscation, which might be a deciding factor if you have concerns about code privacy.

But every option has a downside. Making it a single file slows down startup; making it only self-executable means you depend on the internet to install the dependencies; and so on.
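
To make the single-file versus single-directory choice concrete, here is a small dry-run helper that only prints a build command instead of running it, so neither tool has to be installed. The flags are the documented PyInstaller (--onefile, --onedir) and Nuitka (--onefile, --standalone) options; the helper name build_cmd and the entry script app.py are hypothetical.

```shell
#!/bin/sh
# Dry run: print (do not execute) a build command for a given tool and layout.
# build_cmd and the entry script name are hypothetical; see each tool's docs.
set -eu

build_cmd() {
  tool="$1"   # pyinstaller | nuitka
  layout="$2" # file | dir
  entry="$3"  # e.g. app.py
  case "$tool:$layout" in
    pyinstaller:file) echo "pyinstaller --onefile $entry" ;;
    pyinstaller:dir) echo "pyinstaller --onedir $entry" ;;
    nuitka:file) echo "python -m nuitka --onefile $entry" ;;
    nuitka:dir) echo "python -m nuitka --standalone $entry" ;;
    *) echo "unsupported: $tool/$layout" >&2; return 1 ;;
  esac
}
```

A PyInstaller one-file build unpacks itself into a temporary directory on every launch, which is where the startup cost comes from; a one-directory build starts faster but has to be shipped as a folder.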

My Choice

Since DOSH already depends on the internet, I didn’t care about it being self-contained. All I wanted was an application that even someone who knows nothing about Python can install and use. In my opinion, PyApp was the best option in terms of CLI performance and package size, so I chose it. First, I wrote a Bash script to create the executable file:

```bash
# PyApp reads these variables at build time and embeds the wheel in the binary
export PYAPP_PROJECT_NAME="dosh"
export PYAPP_PROJECT_PATH="$(find "$(pwd)/${{ inputs.dist-path }}" -name "dosh*.whl" -type f | head -n 1)"

# Fail early instead of building a binary without the wheel
if [ -z "$PYAPP_PROJECT_PATH" ]; then
  echo "No dosh wheel found in ${{ inputs.dist-path }}" >&2
  exit 1
fi

echo "Packaging DOSH binary: ${{ inputs.dosh-binary-name }}"
echo "Using wheel: ${PYAPP_PROJECT_PATH}"

# Download the PyApp source code and build it
curl -fL https://github.com/ofek/pyapp/releases/latest/download/source.tar.gz -o pyapp-source.tar.gz
tar -xzf pyapp-source.tar.gz
mv pyapp-v* pyapp-latest
cd pyapp-latest
cargo build --release

# Move the binary to the output directory and rename it
mkdir -p "../${{ inputs.output-path }}"
mv target/release/pyapp "../${{ inputs.output-path }}/${{ inputs.dosh-binary-name }}"
chmod +x "../${{ inputs.output-path }}/${{ inputs.dosh-binary-name }}"

echo "Binary packaged successfully at ${{ inputs.output-path }}/${{ inputs.dosh-binary-name }}"
```
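
The ${{ inputs.* }} expressions in that script only exist inside GitHub Actions. To try the same PyApp build on a local machine, a sketch like the following replaces them with function arguments. The helper names find_wheel and package_dosh and their argument order are hypothetical, and the sketch assumes curl, tar, and a Rust toolchain (cargo) are installed.

```shell
#!/bin/sh
# Local variant of the PyApp packaging step (hypothetical helper names).
# Requires curl, tar and cargo; PyApp reads the PYAPP_* variables at build time.
set -eu

# Print the first dosh wheel found in a directory (empty output if none).
find_wheel() {
  find "$1" -name "dosh*.whl" -type f | head -n 1
}

package_dosh() {
  dist_path="$1" output_path="$2" binary_name="$3"
  wheel="$(find_wheel "$dist_path")"
  if [ -z "$wheel" ]; then
    echo "no dosh wheel found in $dist_path" >&2
    return 1
  fi

  # Resolve absolute paths before changing directories.
  wheel="$(cd "$(dirname "$wheel")" && pwd)/$(basename "$wheel")"
  mkdir -p "$output_path"
  out_dir="$(cd "$output_path" && pwd)"
  work_dir="$(mktemp -d)"

  (
    cd "$work_dir"
    curl -fL https://github.com/ofek/pyapp/releases/latest/download/source.tar.gz -o pyapp-source.tar.gz
    tar -xzf pyapp-source.tar.gz
    cd pyapp-v*
    PYAPP_PROJECT_NAME="dosh" PYAPP_PROJECT_PATH="$wheel" cargo build --release
    # On Windows, cargo produces pyapp.exe instead, so this line would differ there.
    mv target/release/pyapp "$out_dir/$binary_name"
  )
  chmod +x "$out_dir/$binary_name"
  rm -rf "$work_dir"
}
```

For example, package_dosh dist bin dosh-linux-arm64 on an arm64 Linux machine should leave the binary at bin/dosh-linux-arm64, since cargo builds for the host platform by default.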

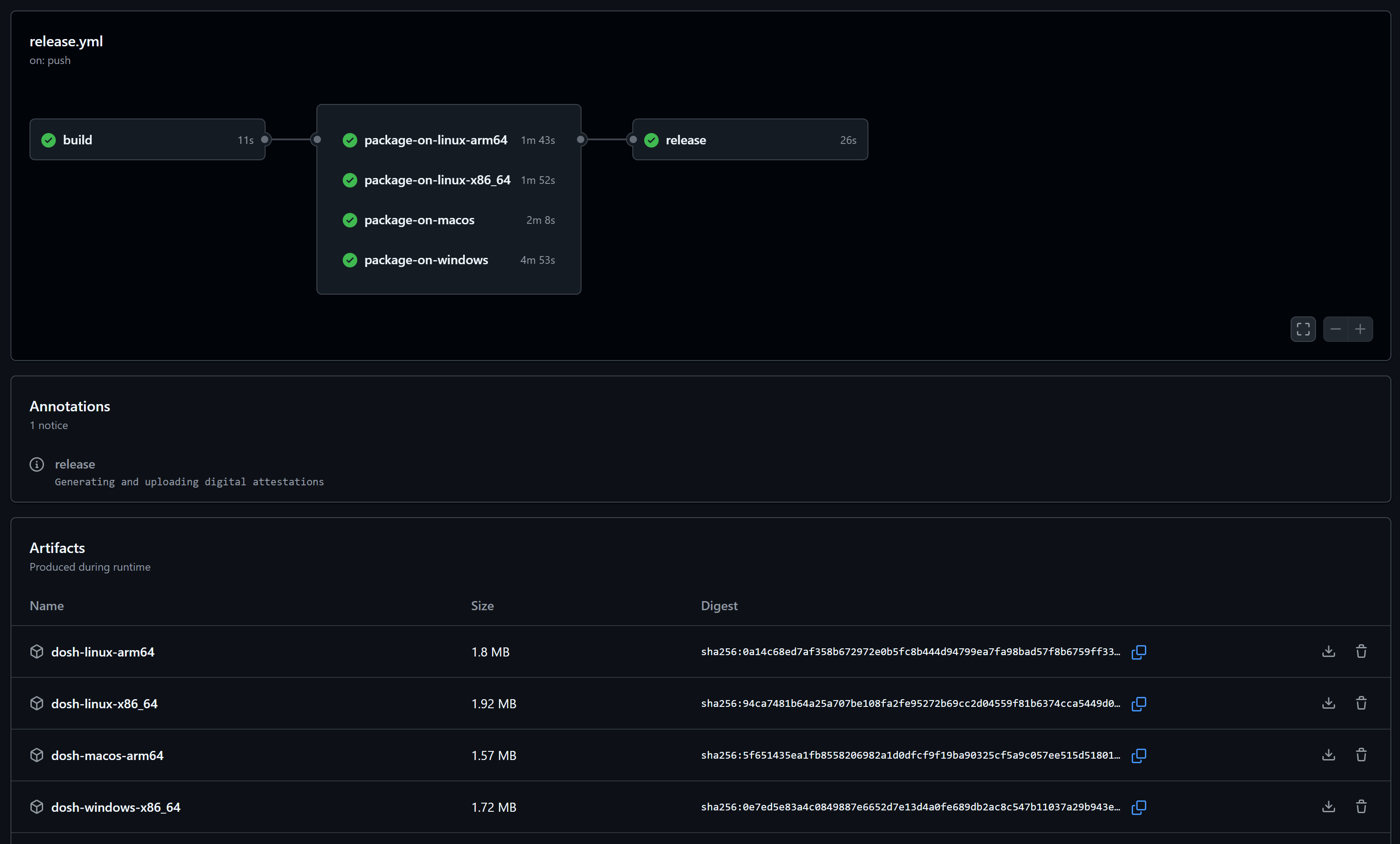

Next, I defined this script as a local action and set up GitHub Actions to run it automatically on Linux (x86_64, arm64), macOS (Apple Silicon), and Windows (x86_64) every time I release a new version. You can find the entire workflow here; below is just the Linux arm64 job as an example:

```yaml
package-on-linux-arm64:
  runs-on: ubuntu-24.04-arm
  needs:
    - build
  steps:
    - uses: actions/checkout@v4
    - uses: ./.github/actions/package-dosh
      with:
        dosh-binary-name: dosh-linux-arm64
        dist-path: dist
        output-path: bin
```

Every time I push a version tag, this workflow runs, builds the binaries, and stores them as artifacts. By the way, don’t be fooled by the binary sizes: a PyApp-packaged application bootstraps itself the first time you run it (installing a Python distribution and the project’s dependencies), so you depend on the internet at least for the first run. For me, that’s an acceptable disadvantage:

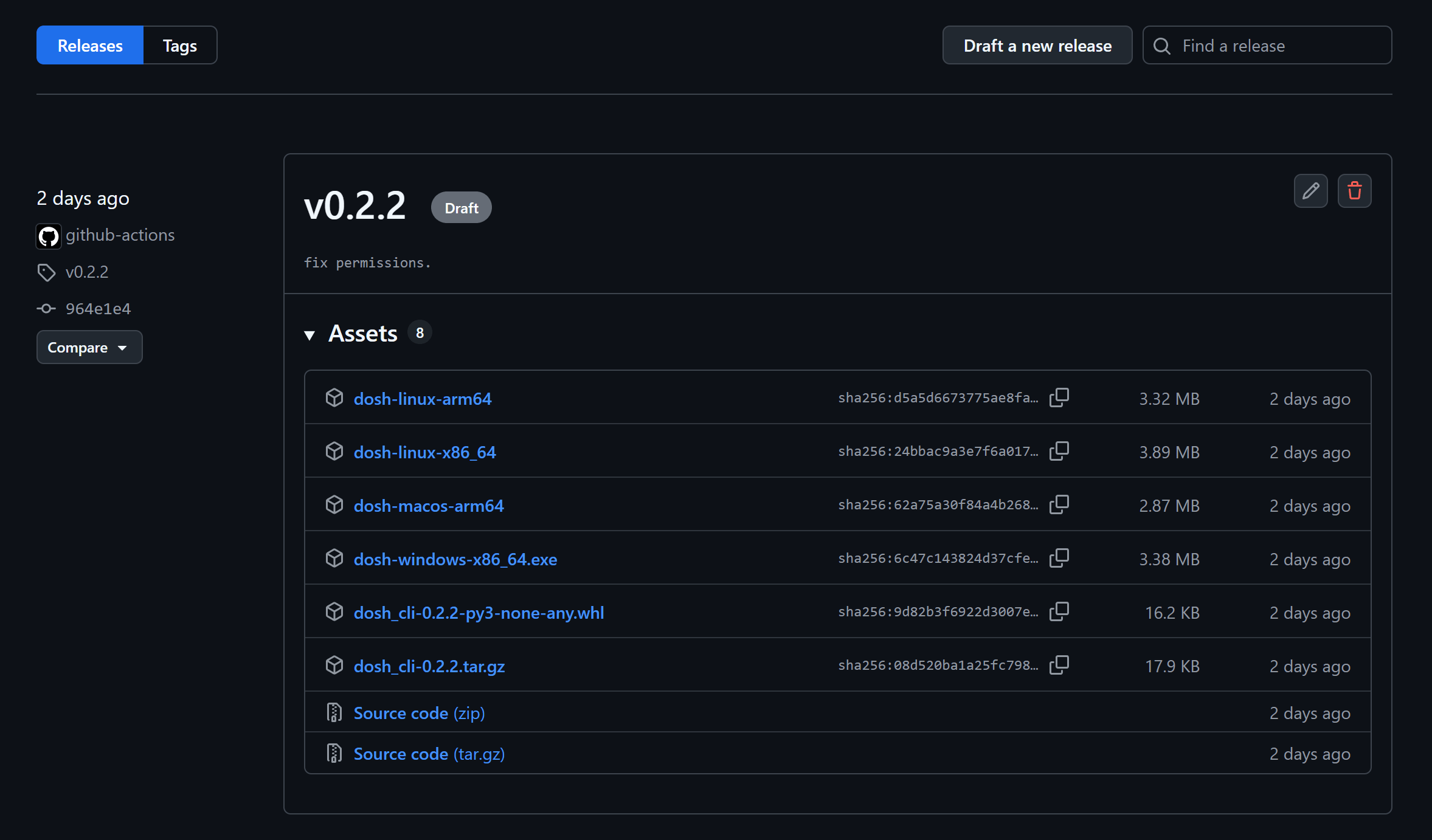

Next, we publish these executables on GitHub Releases to make them available to end users:

```yaml
- uses: ncipollo/release-action@v1
  with:
    artifacts: "dosh-*/dosh-*,release-dists/*"
```

Now the application is available to end users! They can download and use it. Since this is a CLI application, I also wrote install.sh and install.ps1 scripts for convenience, so the README.md file recommends installing from the terminal:

```shell
sh <(curl https://raw.githubusercontent.com/gkmngrgn/dosh/main/install.sh)
```

For Windows users:

```powershell
iwr https://raw.githubusercontent.com/gkmngrgn/dosh/main/install.ps1 -useb | iex
```
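
The contents of install.sh aren’t shown here. As a hedged sketch of what such a script typically does, the following detects the OS and CPU architecture with uname, maps them to a release asset name, and downloads it through GitHub’s releases/latest/download/<asset> redirect. Only dosh-linux-arm64 appears in the workflow; the other asset names, the helper names, the DOSH_BIN_DIR variable, and the ~/.local/bin default are assumptions.

```shell
#!/bin/sh
# Sketch of an install.sh (not the real one). Asset names other than
# dosh-linux-arm64, the helper names and DOSH_BIN_DIR are hypothetical.
set -eu

# Map `uname -s` / `uname -m` output to a release asset name.
dosh_asset_name() {
  os="$1" arch="$2"
  case "$os" in
    Linux) os=linux ;;
    Darwin) os=macos ;;
    *) echo "unsupported OS: $os" >&2; return 1 ;;
  esac
  case "$arch" in
    x86_64 | amd64) arch=x86_64 ;;
    aarch64 | arm64) arch=arm64 ;;
    *) echo "unsupported architecture: $arch" >&2; return 1 ;;
  esac
  echo "dosh-$os-$arch"
}

install_dosh() {
  asset="$(dosh_asset_name "$(uname -s)" "$(uname -m)")"
  bin_dir="${DOSH_BIN_DIR:-$HOME/.local/bin}"
  mkdir -p "$bin_dir"
  # GitHub redirects releases/latest/download/<asset> to the newest release;
  # curl -f fails if that asset was never published (e.g. Intel macOS).
  curl -fL "https://github.com/gkmngrgn/dosh/releases/latest/download/$asset" -o "$bin_dir/dosh"
  chmod +x "$bin_dir/dosh"
  echo "Installed $asset to $bin_dir/dosh"
}
```

A real script would finish by calling install_dosh and perhaps warn when the target directory is not on PATH.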

In the same workflow, I also publish the package to PyPI. This gives users a simple fallback: installing and running the app with uv.

```yaml
- name: Publish release distributions to PyPI
  uses: pypa/gh-action-pypi-publish@release/v1
  with:
    packages-dir: release-dists/
```

On the user’s side, it looks like this:

```shell
$ alias dosh="uvx --from dosh-cli dosh"
$ dosh
```

Now, as long as there are no structural changes, I don’t need to spend any more effort on packaging.

Epilogue

When choosing your packaging method, you also need to consider licenses and code obfuscation if you are developing a closed-source project. I hope this serves as a guide for anyone dealing with this problem.

DOSH is not yet a finished or ready-to-use project, but if it interests you, contributions are welcome. You can reach me with comments via social media or email.